Tired of juggling multiple Terraform configurations? Level up your infrastructure management experience with this comprehensive guide. Discover how to harness the power of variables, workspaces, and locals to streamline your deployments and simplify your workflow.

Key takeaways:

- Variables: Store and manage dynamic infrastructure details effortlessly.

- Workspaces: Isolate state files for multiple projects within a single Terraform configuration.

- Locals: Create conditional configurations to tailor your deployments to specific environments.

We’ll demonstrate these concepts through a real-world Google Cloud Platform (GCP) example, deploying a VPC, subnet, and Compute Engine VM with distinct configurations for dev and test environments. By the end, you’ll be equipped to write cleaner, more efficient Terraform code that scales with your infrastructure needs.


What You’ll Need:

- Two GCP projects with sufficient permissions granted to your account — I’m using vpt-tf-sandbox-dev and vpt-tf-sandbox-test as the project names. Note the -dev and -test — we’re going to use that concept with Terraform.

- Terraform installation

- IDE (I like VS Code with the Terraform extension)

Download the Source Code:

Download the source code from VicsProTips on GitHub! (Note this is part of a larger Terraform Sandbox)

Introduction to Locals, Data Blocks, and Count (src: /intro)

Scenario: Imagine we have dev and test environments (I’m skipping prod since dev and test are sufficient to show the examples — extrapolate to prod). We want to deploy infrastructure to dev and test but we want to configure what exactly is being deployed to each project based on if it is dev or test. This example is not intended to be a demonstration of ‘best practices’ but rather a few examples that you can gain insights from and apply to your use case.

We’ll begin by introducing a few features within Terraform that will be beneficial to us for creating dynamic configurations. We’re going to cover the following:

- locals

- data blocks

- count

Locals

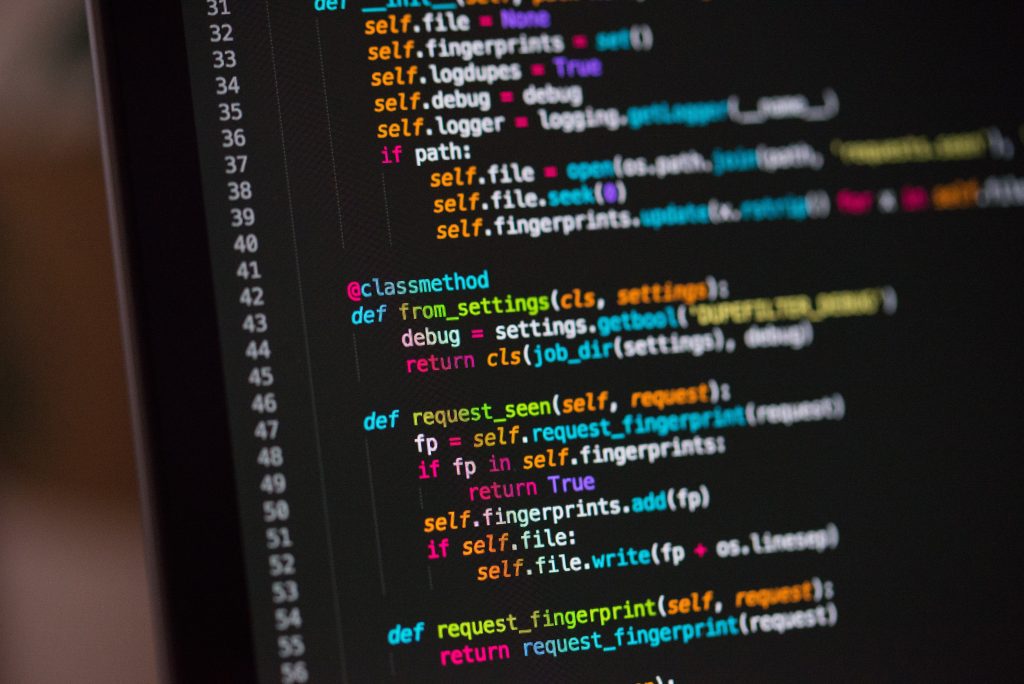

It’s time to introduce the concept of “locals” in Terraform. Locals let us name an expression once and reuse it throughout a configuration, which is what makes our dynamic configurations possible (see the official documentation). In this case we’re going to define a “mode” local: a string that is set to dev | test | prod based on string manipulation of the project name. Here’s a look at the locals.tf we’ll be using.

# locals.tf

locals {

# Determine if we're using dev, test, or prod projects

mode = element(split("-", data.google_project.project.name), 3) # index 3 of "vpt-tf-sandbox-dev" is "dev"

}

data "google_project" "project" {

project_id = var.project-id

}

To summarize the above block, we’re defining a mode local based on string manipulation of the actual project name. We get the project name via a data block that looks up the pre-existing project by its project-id. Keep in mind that GCP may append extra distinguishing digits to a project name when generating the corresponding project-id, so we use the data block to fetch the project name from the unique project-id.
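To see the string manipulation in isolation, here is a minimal sketch that uses a hard-coded project name instead of the data block, so it can be evaluated without GCP credentials. The names example_project_name, name_parts, mode_by_index, and mode_by_last are assumptions made for illustration and are not part of the original source.

```hcl
# Sketch only: a hard-coded name stands in for data.google_project.project.name.
locals {
  example_project_name = "vpt-tf-sandbox-dev" # hypothetical value

  # split() turns the name into ["vpt", "tf", "sandbox", "dev"]
  name_parts = split("-", local.example_project_name)

  # Same approach as locals.tf: take the element at index 3
  mode_by_index = element(local.name_parts, 3)

  # Alternative (an assumption, not from the original): always take the last
  # segment, which does not depend on how many dashes the name contains
  mode_by_last = element(local.name_parts, length(local.name_parts) - 1)
}

output "mode_by_index" {
  value = local.mode_by_index
}

output "mode_by_last" {
  value = local.mode_by_last
}
```

Note that element() wraps around when the index is larger than the list, so a project name with a different number of dashes would still return a value, just not necessarily the segment you expect. That is why the string logic needs to match your naming convention.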

Data blocks

Data blocks let Terraform read resources that are managed outside of the current Terraform configuration, ie things that already exist. For example, if we reserved a global static IP via the GCP console and want to reference it within Terraform, we’d define a corresponding data block so that Terraform is aware of it and can use its attributes within our configs. Read more about Terraform data blocks in the official documentation.
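As a rough illustration of the static IP scenario, the sketch below reads an address that was reserved outside of Terraform. The address name my-reserved-ip and the labels existing_ip and existing_ip_address are hypothetical; substitute whatever exists in your project.

```hcl
# Sketch only: look up a global static IP that was reserved outside of Terraform.
variable "project-id" {
  type        = string
  description = "GCP project that owns the pre-existing address"
}

data "google_compute_global_address" "existing_ip" {
  project = var.project-id
  name    = "my-reserved-ip" # hypothetical name of the address created in the console
}

# Terraform only reads the address; it does not create, change, or destroy it.
output "existing_ip_address" {
  value = data.google_compute_global_address.existing_ip.address
}
```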

count

I’m going to demonstrate a way to create a resource only if a condition is met, ie only for dev in our example. For these situations we’re going to use an additional Terraform feature called ‘count’ that I’ll demonstrate below. Refer to the documentation on count. We’re combining count with a conditional expression, which is also covered in the official documentation.

In the code below the VPC is created only if we’re in ‘dev’ mode. Recall local.mode was defined in our locals.tf covered above.

# vpc.tf

resource "google_compute_network" "vpc" {

count = local.mode == "dev" ? 1 : 0 # only create if mode matches string

depends_on = [ google_project_service.enable-compute-api ]

project = var.project-id

name = var.vpc-name

auto_create_subnetworks = false

}

resource "google_compute_subnetwork" "vpc-subnet" {

depends_on = [ google_compute_network.vpc ]

count = local.mode == "dev" ? 1 : 0 # only create if mode matches string

project = var.project-id

name = "${var.vpc-name}-${var.region}"

network = google_compute_network.vpc[count.index].id

ip_cidr_range = "10.0.0.0/16"

region = var.region

}

Try out the Intro!

The source code and example for introducing these features can be found at this VicsProTips GitHub introduction link.

Notes:

- Make sure you have projects that end in *-dev and *-test and update the locals.tf file as needed. Update the string logic in the “mode” section (the separator and the index used in the string manipulation) to match your use case.

- Make sure to update the backend.tf to match your bucket name.

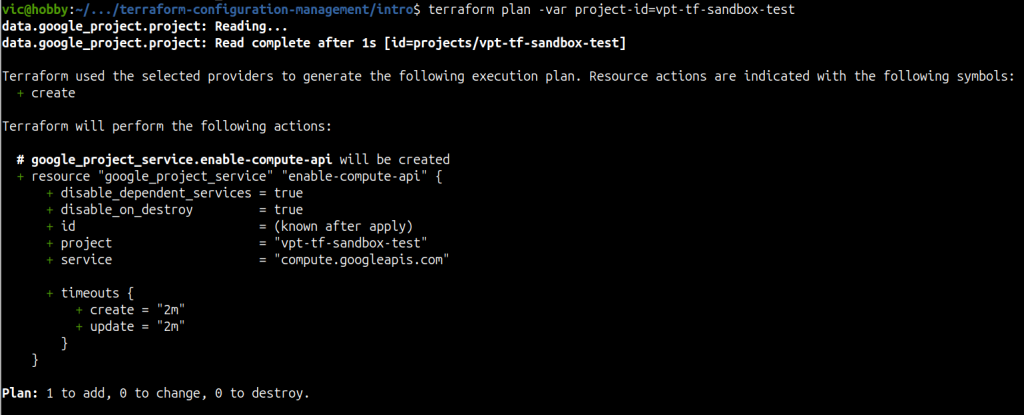

Let’s first check that our VPC is not created when using projects that end in -test.

vpt-tf-sandbox-test

For test, we don’t expect to see a VPC created. Let’s verify as shown below.

terraform plan -var project-id=vpt-tf-sandbox-test

Pro Tip: We can provide a value for a variable on the command line as seen above.

Using the project name of ‘vpt-tf-sandbox-test’ we can see that Terraform is not going to create a VPC. Let’s see what happens with the vpt-tf-sandbox-dev project.

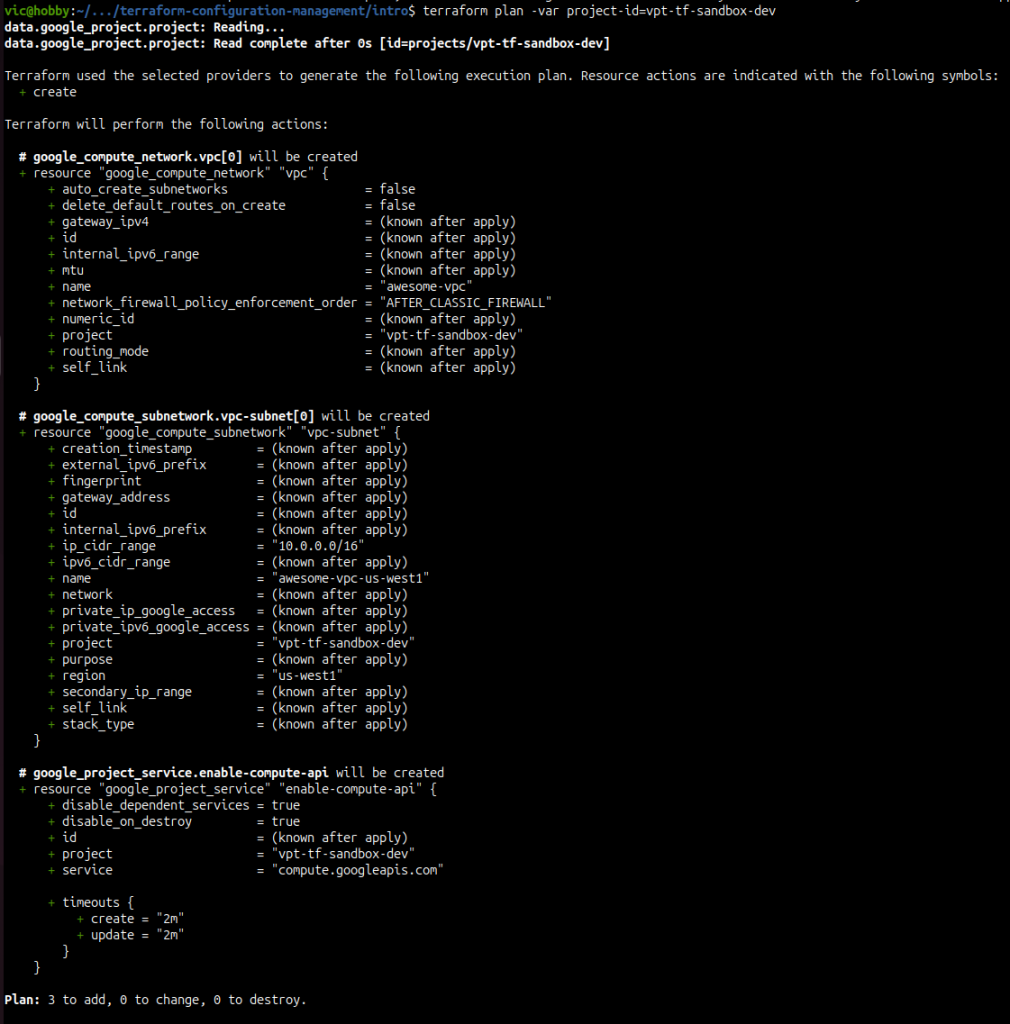

vpt-tf-sandbox-dev

For a dev project we expect to see a VPC created. Let’s verify below.

terraform plan -var project-id=vpt-tf-sandbox-dev

We have verified that, based on the project name of vpt-tf-sandbox-dev, Terraform will create a VPC. Recall this is because we created a locals definition and added the concept of “mode”, which we then use in the vpc.tf logic via count with a conditional expression. Pretty awesome right!?!?!
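One thing to keep in mind with count is that the resource becomes a list, which may be empty. The sketch below is a stand-alone illustration of that, under a few assumptions: it uses the built-in terraform_data resource (Terraform 1.4 or later) in place of a real GCP resource, and the variable mode and the names dev_only and dev_only_id are hypothetical stand-ins rather than anything from the original source.

```hcl
# Sketch only: var.mode stands in for local.mode so this runs without GCP.
variable "mode" {
  type    = string
  default = "dev"
}

# Created once when mode is "dev", zero times otherwise
resource "terraform_data" "dev_only" {
  count = var.mode == "dev" ? 1 : 0
  input = "created only in dev"
}

# one() returns the single element of the list, or null when the list is empty,
# so this output is safe to evaluate in both dev and test
output "dev_only_id" {
  value = one(terraform_data.dev_only[*].id)
}
```

Referencing terraform_data.dev_only[0] directly would fail when the count is 0, which is why the subnet in vpc.tf carries the same count condition as the VPC it indexes into.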

Let’s build on these concepts and further define infrastructure for our dev and test environments.

Advanced Example (src: /advanced-w-variables)

Let’s define our use case by outlining what we want to deploy per environment:

- dev:

- VPC

- Compute Engine VM (e2-micro, us-west1-a, debian-11)

- GCS bucket

- test:

- VPC

- Compute Engine VM (e2-small, us-west1-b, debian-12)

This use case is for demonstration purposes only to illustrate Terraform configuration management options available via Terraform itself.

We’ve used variables in other posts, but when using them to define project-ids in a live environment to distinguish between multiple projects I stress the following:

CAUTION: Using variables.tf to distinguish between projects is DANGEROUS due to how Terraform manages state files. Do not use this approach in a live environment with multiple projects. Use the Advanced with Workspaces section that I cover towards the end of this post. There’s much to learn from this variables section but be sure to read through the ENTIRE blog.

We’re going to utilize locals to a much greater extent as we build out this example. We’re going to add support for resource definitions for items such as our GCS bucket and we’re going to implement support for configuring a virtual machine based on if we’re deploying to a dev or test project. Lots to cover.

Source code is available here on VicsProTips GitHub.

Let’s go over each file in our Terraform config that builds out our use case.

locals.tf

Here is our newly crafted locals.tf file that supports the customization we’re implementing. We’ll go over the locals.tf in detail below.

locals {

# add support for project_id so we can swap between variables and workspaces

project-id = var.project-id

# Determine if we're using dev, test, or prod projects

mode = element(split("-", data.google_project.project.name), 3)

# bucket

gcs = {

name = "my-bucket-${data.google_project.project.name}"

location = var.region

}

# compute engine VMs with dev and test configs

vms = {

name = "vicsprotips-${local.mode}"

dev = {

zone = "${var.region}-a"

machine_type = "e2-micro"

boot_disk = {

initialize_params = {

image = "debian-cloud/debian-11"

}

}

}

test = {

zone = "${var.region}-b"

machine_type = "e2-small"

boot_disk = {

initialize_params = {

image = "debian-cloud/debian-12"

}

}

}

}

}

data "google_project" "project" {

project_id = local.project-id

}

At the top of the file we see a setting for project-id.

locals {

# add support for project_id so we can swap between variables and workspaces

project-id = var.project-id

...

We’ll see as we dig into each *.tf file that we’re making greater references to the locals. As we standardize more on the locals, we can set ourselves up for future success by adding a single location where we can set the project-id via variables or workspaces. We’ve not discussed Terraform workspaces but I’ll show an example towards the end of this blog post. For now, here is the official documentation about Terraform workspaces.

Next we see the code that sets the mode based on the project name. Notice how the data block has been updated to reference the local.project-id.

locals {

...

# Determine if we're using dev, test, or prod projects

mode = element(split("-", data.google_project.project.name), 3)

...

}

data "google_project" "project" {

project_id = local.project-id

}

GCS Bucket locals

Next up is the section for our GCS bucket.

locals {

...

# bucket

gcs = {

name = "my-bucket-${data.google_project.project.name}"

location = var.region

}

...

We’re defining what the bucket name will be and defining a location. Let’s look at our gcs.tf to see how locals is used.

GCS Resource Block — gcs.tf

Let’s look at our gcs.tf, which defines the bucket we want to deploy only to the dev project. Recall from our intro example that we can use count and a conditional expression to create a resource only if a specific condition is met. In our case, we only create a bucket if we’re deploying to a dev project (ie, vpt-tf-sandbox-dev).

# gcs.tf

resource "google_storage_bucket" "my-bucket" {

count = local.mode == "dev" ? 1 : 0

project = local.project-id

name = local.gcs.name

location = local.gcs.location

# force_destroy: When deleting a bucket, this boolean option will delete all contained objects.

# If you try to delete a bucket that contains objects, Terraform will fail that run.

force_destroy = true

uniform_bucket_level_access = true

public_access_prevention = "enforced"

autoclass {

enabled = true

}

}

Reviewing the gcs.tf file we see that we’re making extensive use of the locals definitions for count and project. We can also see that we’re pulling the GCS bucket name and location from the gcs block we defined in our locals. Utilizing the locals to define deployment variables provides us with an opportunity to consolidate and organize deployment options so that the resource block can be written once and left unmodified. The “tweaks” can be done via the locals definition.

Pro Tip: You can define multiple locals files. For example, we could’ve defined a gcs-locals.tf, a project-locals.tf, etc, alongside our locals.tf and moved the relevant bits out of locals.tf into them. As you deploy more and more infrastructure, this keeps each locals definition tightly coupled to its resources via naming convention, instead of growing one mega locals.tf.
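A minimal sketch of what such a split could look like is shown here. The file name gcs-locals.tf comes from the tip above; the bucket name my-bucket-example is a hypothetical placeholder, since the original derives the name from the project data block.

```hcl
# gcs-locals.tf: only the bucket-related locals live in this file (sketch).
variable "region" {
  type    = string
  default = "us-west1"
}

locals {
  gcs = {
    name     = "my-bucket-example" # hypothetical; the original uses the project name here
    location = var.region
  }
}
```

Terraform loads every *.tf file in the directory as a single configuration, so resources still reference local.gcs.name exactly as before. Each local name can only be defined once across all files, so the gcs entry has to be removed from locals.tf when it moves.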

Compute Engine Locals for Dev and Test

Now we’re going to define our deployments for a dev and test compute engine vm. We’re going to make use of the local.mode and we’re going to make dedicated definitions for what a dev and test compute engine VM should look like. Let’s dive in.

locals {

...

# compute engine VMs with dev and test configs

vms = {

name = "vicsprotips-${local.mode}"

dev = {

zone = "${var.region}-a"

machine_type = "e2-micro"

boot_disk = {

initialize_params = {

image = "debian-cloud/debian-11"

}

}

}

test = {

zone = "${var.region}-b"

machine_type = "e2-small"

boot_disk = {

initialize_params = {

image = "debian-cloud/debian-12"

}

}

}

}

}

Notice in the above locals that we’re defining a vms block that has a general setting for name that is set via a hard coded name and the mode. Then we define a dev = {…} and test = {…} block within vms. This is how we can define detailed settings for each of the defined modes. Remember that although I’m only demonstrating with dev and test, you can extend the concept to include a staging, prod, etc to suit your use case.

Let’s now look at how we use these settings in a Compute Engine resource block.

Compute Engine Resource Block — gce.tf

Here is our gce.tf that references the locals definitions above.

# gce.tf

resource "google_compute_instance" "vm_machine" {

depends_on = [ google_compute_network.vpc ]

project = local.project-id

name = local.vms.name

zone = local.vms[local.mode].zone

machine_type = local.vms[local.mode].machine_type

boot_disk {

initialize_params {

image = local.vms[local.mode].boot_disk.initialize_params.image

}

}

network_interface {

subnetwork = google_compute_subnetwork.vpc-subnet.name

}

}

Reviewing the above block we see that we’re referencing the locals for the project-id as in the gcs code. We also see that we’re accessing the name field that we defined in the vms block of our locals definition. Now it gets very interesting: we’re able to reference the dev or test definitions under the vms block based on the mode. This means that we can have completely distinct configurations per mode simply by defining the modes in our locals and then referencing the locals via our resource definitions.
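The per-mode lookup can be tried on its own with the sketch below. It is a simplified stand-in, not the original code: the variable mode replaces local.mode, the map vm_settings holds only the zone and machine type, and the validation block is an addition that fails early when a mode has no matching entry.

```hcl
# Sketch only: var.mode stands in for local.mode so this runs without GCP.
variable "mode" {
  type    = string
  default = "dev"

  validation {
    condition     = contains(["dev", "test"], var.mode)
    error_message = "The mode must be one of: dev, test."
  }
}

locals {
  # One entry per mode, mirroring the dev and test blocks under vms
  vm_settings = {
    dev = {
      zone         = "us-west1-a"
      machine_type = "e2-micro"
    }
    test = {
      zone         = "us-west1-b"
      machine_type = "e2-small"
    }
  }

  # Index the map with the mode, as gce.tf does with local.vms[local.mode]
  selected = local.vm_settings[var.mode]
}

output "selected_machine_type" {
  value = local.selected.machine_type
}
```

Without a matching key, an index expression such as local.vms[local.mode] raises an error at plan time, so adding a prod or staging mode means adding a corresponding entry to the map.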

Let’s verify that our settings work as expected.

Verifying Configuration “dev”

The following output shows that Terraform is going to create the following:

- VPC and subnet

- VM (e2-micro, us-west1-a, debian 11)

- GCS bucket

$ terraform apply -var project-id=vpt-tf-sandbox-dev

data.google_project.project: Reading...

data.google_project.project: Read complete after 1s [id=projects/vpt-tf-sandbox-dev]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# google_compute_instance.vm_machine will be created

+ resource "google_compute_instance" "vm_machine" {

...

+ machine_type = "e2-micro"

+ metadata_fingerprint = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = "vicsprotips-dev"

+ project = "vpt-tf-sandbox-dev"

...

+ zone = "us-west1-a"

+ boot_disk {

...

+ initialize_params {

+ image = "debian-cloud/debian-11"

...

}

}

...

+ network_interface {

...

+ subnetwork = "awesome-vpc-us-west1"

...

}

...

}

# google_compute_network.vpc will be created

+ resource "google_compute_network" "vpc" {

...

+ name = "awesome-vpc"

...

}

# google_compute_subnetwork.vpc-subnet will be created

+ resource "google_compute_subnetwork" "vpc-subnet" {

...

+ name = "awesome-vpc-us-west1"

+ project = "vpt-tf-sandbox-dev"

+ region = "us-west1"

...

}

# google_project_service.enable-compute-api will be created

+ resource "google_project_service" "enable-compute-api" {

...

+ project = "vpt-tf-sandbox-dev"

+ service = "compute.googleapis.com"

...

}

# google_storage_bucket.my-bucket[0] will be created

+ resource "google_storage_bucket" "my-bucket" {

...

+ location = "US-WEST1"

+ name = "my-bucket-vpt-tf-sandbox-dev"

+ project = "vpt-tf-sandbox-dev"

...

}

Plan: 5 to add, 0 to change, 0 to destroy.

Verifying Configuration “test”

Now let’s run the following to check out our test logic

terraform apply -var project-id=vpt-tf-sandbox-test

Here is the output of our command. Read it closely.

$ terraform apply -var project-id=vpt-tf-sandbox-test

data.google_project.project: Reading...

google_project_service.enable-compute-api: Refreshing state... [id=vpt-tf-sandbox-dev/compute.googleapis.com]

data.google_project.project: Read complete after 1s [id=projects/vpt-tf-sandbox-test]

google_storage_bucket.my-bucket[0]: Refreshing state... [id=my-bucket-vpt-tf-sandbox-dev]

google_compute_network.vpc: Refreshing state... [id=projects/vpt-tf-sandbox-dev/global/networks/awesome-vpc]

google_compute_subnetwork.vpc-subnet: Refreshing state... [id=projects/vpt-tf-sandbox-dev/regions/us-west1/subnetworks/awesome-vpc-us-west1]

google_compute_instance.vm_machine: Refreshing state... [id=projects/vpt-tf-sandbox-dev/zones/us-west1-a/instances/vicsprotips-dev]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

- destroy

-/+ destroy and then create replacement

Terraform will perform the following actions:

# google_compute_instance.vm_machine must be replaced

-/+ resource "google_compute_instance" "vm_machine" {

...

~ id = "projects/vpt-tf-sandbox-dev/zones/us-west1-a/instances/vicsprotips-dev" -> (known after apply)

~ machine_type = "e2-micro" -> "e2-small"

~ name = "vicsprotips-dev" -> "vicsprotips-test" # forces replacement

~ project = "vpt-tf-sandbox-dev" -> "vpt-tf-sandbox-test" # forces replacement

~ zone = "us-west1-a" -> "us-west1-b" # forces replacement

# (4 unchanged attributes hidden)

~ boot_disk {

~ device_name = "persistent-disk-0" -> (known after apply)

+ disk_encryption_key_sha256 = (known after apply)

+ kms_key_self_link = (known after apply)

~ source = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/zones/us-west1-a/disks/vicsprotips-dev" -> (known after apply)

# (3 unchanged attributes hidden)

~ initialize_params {

- enable_confidential_compute = false -> null

~ image = "https://www.googleapis.com/compute/v1/projects/debian-cloud/global/images/debian-11-bullseye-v20240815" -> "debian-cloud/debian-12" # forces replacement

~ labels = {} -> (known after apply)

~ provisioned_iops = 0 -> (known after apply)

~ provisioned_throughput = 0 -> (known after apply)

- resource_manager_tags = {} -> null

~ size = 10 -> (known after apply)

~ type = "pd-standard" -> (known after apply)

# (1 unchanged attribute hidden)

}

}

~ confidential_instance_config {

+ allow_stopping_for_update = (known after apply)

+ can_ip_forward = (known after apply)

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = (known after apply)

+ description = (known after apply)

+ desired_status = (known after apply)

+ effective_labels = (known after apply)

+ enable_display = (known after apply)

+ guest_accelerator = (known after apply)

+ hostname = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ labels = (known after apply)

+ machine_type = (known after apply)

+ metadata = (known after apply)

+ metadata_fingerprint = (known after apply)

+ metadata_startup_script = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = (known after apply)

+ project = (known after apply)

+ resource_policies = (known after apply)

+ self_link = (known after apply)

+ tags = (known after apply)

+ tags_fingerprint = (known after apply)

+ terraform_labels = (known after apply)

+ zone = (known after apply)

} -> (known after apply)

~ network_interface {

~ internal_ipv6_prefix_length = 0 -> (known after apply)

+ ipv6_access_type = (known after apply)

+ ipv6_address = (known after apply)

~ name = "nic0" -> (known after apply)

~ network = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/global/networks/awesome-vpc" -> (known after apply)

~ network_ip = "10.0.0.2" -> (known after apply)

- queue_count = 0 -> null

~ stack_type = "IPV4_ONLY" -> (known after apply)

~ subnetwork = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/regions/us-west1/subnetworks/awesome-vpc-us-west1" -> "awesome-vpc-us-west1"

~ subnetwork_project = "vpt-tf-sandbox-dev" -> (known after apply)

# (1 unchanged attribute hidden)

}

~ reservation_affinity {

+ allow_stopping_for_update = (known after apply)

+ can_ip_forward = (known after apply)

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = (known after apply)

+ description = (known after apply)

+ desired_status = (known after apply)

+ effective_labels = (known after apply)

+ enable_display = (known after apply)

+ guest_accelerator = (known after apply)

+ hostname = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ labels = (known after apply)

+ machine_type = (known after apply)

+ metadata = (known after apply)

+ metadata_fingerprint = (known after apply)

+ metadata_startup_script = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = (known after apply)

+ project = (known after apply)

+ resource_policies = (known after apply)

+ self_link = (known after apply)

+ tags = (known after apply)

+ tags_fingerprint = (known after apply)

+ terraform_labels = (known after apply)

+ zone = (known after apply)

} -> (known after apply)

~ scheduling {

+ allow_stopping_for_update = (known after apply)

+ can_ip_forward = (known after apply)

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = (known after apply)

+ description = (known after apply)

+ desired_status = (known after apply)

+ effective_labels = (known after apply)

+ enable_display = (known after apply)

+ guest_accelerator = (known after apply)

+ hostname = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ labels = (known after apply)

+ machine_type = (known after apply)

+ metadata = (known after apply)

+ metadata_fingerprint = (known after apply)

+ metadata_startup_script = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = (known after apply)

+ project = (known after apply)

+ resource_policies = (known after apply)

+ self_link = (known after apply)

+ tags = (known after apply)

+ tags_fingerprint = (known after apply)

+ terraform_labels = (known after apply)

+ zone = (known after apply)

} -> (known after apply)

- shielded_instance_config {

- enable_integrity_monitoring = true -> null

- enable_secure_boot = false -> null

- enable_vtpm = true -> null

}

}

# google_compute_network.vpc must be replaced

-/+ resource "google_compute_network" "vpc" {

- enable_ula_internal_ipv6 = false -> null

+ gateway_ipv4 = (known after apply)

~ id = "projects/vpt-tf-sandbox-dev/global/networks/awesome-vpc" -> (known after apply)

+ internal_ipv6_range = (known after apply)

~ mtu = 0 -> (known after apply)

name = "awesome-vpc"

~ numeric_id = "6704215090522890952" -> (known after apply)

~ project = "vpt-tf-sandbox-dev" -> "vpt-tf-sandbox-test" # forces replacement

~ routing_mode = "REGIONAL" -> (known after apply)

~ self_link = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/global/networks/awesome-vpc" -> (known after apply)

# (4 unchanged attributes hidden)

}

# google_compute_subnetwork.vpc-subnet must be replaced

-/+ resource "google_compute_subnetwork" "vpc-subnet" {

~ creation_timestamp = "2024-08-19T12:25:52.017-07:00" -> (known after apply)

+ external_ipv6_prefix = (known after apply)

+ fingerprint = (known after apply)

~ gateway_address = "10.0.0.1" -> (known after apply)

~ id = "projects/vpt-tf-sandbox-dev/regions/us-west1/subnetworks/awesome-vpc-us-west1" -> (known after apply)

+ internal_ipv6_prefix = (known after apply)

+ ipv6_cidr_range = (known after apply)

name = "awesome-vpc-us-west1"

~ network = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/global/networks/awesome-vpc" # forces replacement -> (known after apply) # forces replacement

~ private_ip_google_access = false -> (known after apply)

~ private_ipv6_google_access = "DISABLE_GOOGLE_ACCESS" -> (known after apply)

~ project = "vpt-tf-sandbox-dev" -> "vpt-tf-sandbox-test" # forces replacement

~ purpose = "PRIVATE" -> (known after apply)

~ secondary_ip_range = [] -> (known after apply)

~ self_link = "https://www.googleapis.com/compute/v1/projects/vpt-tf-sandbox-dev/regions/us-west1/subnetworks/awesome-vpc-us-west1" -> (known after apply)

~ stack_type = "IPV4_ONLY" -> (known after apply)

# (5 unchanged attributes hidden)

}

# google_project_service.enable-compute-api must be replaced

-/+ resource "google_project_service" "enable-compute-api" {

~ id = "vpt-tf-sandbox-dev/compute.googleapis.com" -> (known after apply)

~ project = "vpt-tf-sandbox-dev" -> "vpt-tf-sandbox-test" # forces replacement

# (3 unchanged attributes hidden)

# (1 unchanged block hidden)

}

# google_storage_bucket.my-bucket[0] will be destroyed

# (because index [0] is out of range for count)

- resource "google_storage_bucket" "my-bucket" {

- default_event_based_hold = false -> null

- effective_labels = {} -> null

- enable_object_retention = false -> null

- force_destroy = true -> null

- id = "my-bucket-vpt-tf-sandbox-dev" -> null

- location = "US-WEST1" -> null

- name = "my-bucket-vpt-tf-sandbox-dev" -> null

- project = "vpt-tf-sandbox-dev" -> null

- project_number = 61400760433 -> null

- public_access_prevention = "enforced" -> null

- requester_pays = false -> null

- self_link = "https://www.googleapis.com/storage/v1/b/my-bucket-vpt-tf-sandbox-dev" -> null

- storage_class = "STANDARD" -> null

- terraform_labels = {} -> null

- uniform_bucket_level_access = true -> null

- url = "gs://my-bucket-vpt-tf-sandbox-dev" -> null

- autoclass {

- enabled = true -> null

- terminal_storage_class = "NEARLINE" -> null

}

- soft_delete_policy {

- effective_time = "2024-08-19T19:24:05.430Z" -> null

- retention_duration_seconds = 604800 -> null

}

}

Plan: 4 to add, 0 to change, 5 to destroy.

Notice that it wants to add 4 resources and destroy 5 resources. Why? It’s because of state file management. Both runs share a single state file, and that state currently records the five resources we created in vpt-tf-sandbox-dev. When we switch the project-id from vpt-tf-sandbox-dev to vpt-tf-sandbox-test, Terraform compares that state against our configuration, sees that the project and other attributes have changed, and plans to replace four of the dev resources and destroy the dev-only bucket instead of creating new infrastructure alongside them. Terraform has been storing this state in the ‘default’ workspace, which we need to clean up. Sharing the default workspace between projects is likely NOT what you ever want to do. DANGER DANGER! Let’s clean up.

Cleanup

IMPORTANT!!! — Before proceeding to the Terraform Workspaces section below you need to destroy ALL infrastructure that has been created in the default workspace. Destroy everything you’ve created via “terraform apply”. Example shown below.

terraform destroy -var project-id=vpt-tf-sandbox-dev

# output ...

terraform destroy -var project-id=vpt-tf-sandbox-test

# output ...

I’ll show you how to manage your state files via Terraform workspaces and we’ll make minor code tweaks to support workspaces. We’ll then be able to support multiple projects!

Terraform Workspaces (src: /advanced-w-workspaces)

Updated source code for the advanced workspace example can be found here at VicsProTips GitHub.

Let’s start by opening a bash terminal into the advanced-w-workspaces directory in the above source code and listing our existing Terraform workspaces.

# don't forget to terraform init

terraform init

# list the workspaces

terraform workspace list

* default

The default workspace is created … by default. In the testing we did above, each time we did a terraform apply we were updating the default workspace state files. In the intro we used ‘terraform plan’ which didn’t alter state. In the “advanced-w-variables” we altered the default state every time we applied the Terraform config.
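For context on where that state lives, here is a sketch of a GCS backend block like the backend.tf mentioned in the intro notes. The bucket name and prefix are hypothetical placeholders, not values from the original source.

```hcl
# backend.tf (sketch): remote state in a GCS bucket.
terraform {
  backend "gcs" {
    bucket = "my-tf-state-bucket"    # hypothetical; use your own bucket name
    prefix = "advanced-w-workspaces" # hypothetical path inside the bucket
  }
}

# With this backend, each workspace gets its own state object:
#   <prefix>/default.tfstate             for the default workspace
#   <prefix>/vpt-tf-sandbox-dev.tfstate  for a workspace named vpt-tf-sandbox-dev
```

Because every workspace writes to a separate state object, applying in one workspace cannot plan changes against resources recorded in another.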

Let’s resolve this state file issue by creating two workspaces via bash terminal as follows. Update the commands to match your project naming convention.

# create the workspaces

terraform workspace new vpt-tf-sandbox-dev

terraform workspace new vpt-tf-sandbox-test

# list the workspaces

terraform workspace list

# select the dev workspace

terraform workspace select vpt-tf-sandbox-dev

# show the active workspace

terraform workspace show

I’m now on the vpt-tf-sandbox-dev workspace. Before we begin applying the Terraform configs to the new workspaces we’re going to update our locals.tf file as follows.

locals.tf — updated to support Terraform workspaces.

locals {

# add support for project_id so we can swap between variables and workspaces

project-id = terraform.workspace # the workspace name doubles as the GCP project-id

...
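Since the workspace name now doubles as the project-id, running from the default workspace would point the config at a project named “default”. One possible guard is sketched below; it is an addition of mine rather than part of the original source, it assumes Terraform 1.4 or later for the built-in terraform_data resource, and the name workspace_guard is hypothetical.

```hcl
# Sketch only: fail the plan when no project workspace has been selected.
locals {
  project-id = terraform.workspace
}

resource "terraform_data" "workspace_guard" {
  lifecycle {
    precondition {
      condition     = terraform.workspace != "default"
      error_message = "Select a project workspace first, for example: terraform workspace select vpt-tf-sandbox-dev"
    }
  }
}
```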

variables.tf — updated to support Terraform workspaces

Since we’re using Terraform workspaces we no longer need to use the project-id variable.

# variable "project-id" {

# NOTE: Not needed since we're using workspaces!!!!!!!!!!

# type = string

# description = "GCP Project ID to use, ie vpt-tf-sandbox-dev | vpt-tf-sandbox-test"

# }

variable "region" {

type = string

description = "Specify a region, ie, us-central1 | us-east1 | ..."

default = "us-west1"

}

variable "vpc-name" {

type = string

description = "Specify the name of the vpc to be created, ie awesome-vpc"

default = "awesome-vpc"

}

Verifying Configuration “dev”

# verify we're using the dev workspace

terraform workspace show

vpt-tf-sandbox-dev

Now let’s apply our config.

$ terraform apply

data.google_project.project: Reading...

data.google_project.project: Read complete after 1s [id=projects/vpt-tf-sandbox-dev]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# google_compute_instance.vm_machine will be created

+ resource "google_compute_instance" "vm_machine" {

+ can_ip_forward = false

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = false

+ effective_labels = (known after apply)

+ guest_accelerator = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ machine_type = "e2-micro"

+ metadata_fingerprint = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = "vicsprotips-dev"

+ project = "vpt-tf-sandbox-dev"

+ self_link = (known after apply)

+ tags_fingerprint = (known after apply)

+ terraform_labels = (known after apply)

+ zone = "us-west1-a"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_encryption_key_sha256 = (known after apply)

+ kms_key_self_link = (known after apply)

+ mode = "READ_WRITE"

+ source = (known after apply)

+ initialize_params {

+ image = "debian-cloud/debian-11"

+ labels = (known after apply)

+ provisioned_iops = (known after apply)

+ provisioned_throughput = (known after apply)

+ size = (known after apply)

+ type = (known after apply)

}

}

+ confidential_instance_config (known after apply)

+ network_interface {

+ internal_ipv6_prefix_length = (known after apply)

+ ipv6_access_type = (known after apply)

+ ipv6_address = (known after apply)

+ name = (known after apply)

+ network = (known after apply)

+ network_ip = (known after apply)

+ stack_type = (known after apply)

+ subnetwork = "awesome-vpc-us-west1"

+ subnetwork_project = (known after apply)

}

+ reservation_affinity (known after apply)

+ scheduling (known after apply)

}

# google_compute_network.vpc will be created

+ resource "google_compute_network" "vpc" {

+ auto_create_subnetworks = false

+ delete_default_routes_on_create = false

+ gateway_ipv4 = (known after apply)

+ id = (known after apply)

+ internal_ipv6_range = (known after apply)

+ mtu = (known after apply)

+ name = "awesome-vpc"

+ network_firewall_policy_enforcement_order = "AFTER_CLASSIC_FIREWALL"

+ numeric_id = (known after apply)

+ project = "vpt-tf-sandbox-dev"

+ routing_mode = (known after apply)

+ self_link = (known after apply)

}

# google_compute_subnetwork.vpc-subnet will be created

+ resource "google_compute_subnetwork" "vpc-subnet" {

+ creation_timestamp = (known after apply)

+ external_ipv6_prefix = (known after apply)

+ fingerprint = (known after apply)

+ gateway_address = (known after apply)

+ id = (known after apply)

+ internal_ipv6_prefix = (known after apply)

+ ip_cidr_range = "10.0.0.0/16"

+ ipv6_cidr_range = (known after apply)

+ name = "awesome-vpc-us-west1"

+ network = (known after apply)

+ private_ip_google_access = (known after apply)

+ private_ipv6_google_access = (known after apply)

+ project = "vpt-tf-sandbox-dev"

+ purpose = (known after apply)

+ region = "us-west1"

+ secondary_ip_range = (known after apply)

+ self_link = (known after apply)

+ stack_type = (known after apply)

}

# google_project_service.enable-compute-api will be created

+ resource "google_project_service" "enable-compute-api" {

+ disable_dependent_services = true

+ disable_on_destroy = true

+ id = (known after apply)

+ project = "vpt-tf-sandbox-dev"

+ service = "compute.googleapis.com"

+ timeouts {

+ create = "2m"

+ update = "2m"

}

}

# google_storage_bucket.my-bucket[0] will be created

+ resource "google_storage_bucket" "my-bucket" {

+ effective_labels = (known after apply)

+ force_destroy = true

+ id = (known after apply)

+ location = "US-WEST1"

+ name = "my-bucket-vpt-tf-sandbox-dev"

+ project = "vpt-tf-sandbox-dev"

+ project_number = (known after apply)

+ public_access_prevention = "enforced"

+ rpo = (known after apply)

+ self_link = (known after apply)

+ storage_class = "STANDARD"

+ terraform_labels = (known after apply)

+ uniform_bucket_level_access = true

+ url = (known after apply)

+ autoclass {

+ enabled = true

+ terminal_storage_class = (known after apply)

}

+ soft_delete_policy (known after apply)

+ versioning (known after apply)

+ website (known after apply)

}

Plan: 5 to add, 0 to change, 0 to destroy.

Looks good. Now let’s try the configuration for test.

Verifying Configuration “test”

# switch to the test workspace

terraform workspace select vpt-tf-sandbox-test

Now apply the configuration:

$ terraform workspace show

vpt-tf-sandbox-test

$ terraform apply

data.google_project.project: Reading...

data.google_project.project: Read complete after 2s [id=projects/vpt-tf-sandbox-test]

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# google_compute_instance.vm_machine will be created

+ resource "google_compute_instance" "vm_machine" {

+ can_ip_forward = false

+ cpu_platform = (known after apply)

+ current_status = (known after apply)

+ deletion_protection = false

+ effective_labels = (known after apply)

+ guest_accelerator = (known after apply)

+ id = (known after apply)

+ instance_id = (known after apply)

+ label_fingerprint = (known after apply)

+ machine_type = "e2-small"

+ metadata_fingerprint = (known after apply)

+ min_cpu_platform = (known after apply)

+ name = "vicsprotips-test"

+ project = "vpt-tf-sandbox-test"

+ self_link = (known after apply)

+ tags_fingerprint = (known after apply)

+ terraform_labels = (known after apply)

+ zone = "us-west1-b"

+ boot_disk {

+ auto_delete = true

+ device_name = (known after apply)

+ disk_encryption_key_sha256 = (known after apply)

+ kms_key_self_link = (known after apply)

+ mode = "READ_WRITE"

+ source = (known after apply)

+ initialize_params {

+ image = "debian-cloud/debian-12"

+ labels = (known after apply)

+ provisioned_iops = (known after apply)

+ provisioned_throughput = (known after apply)

+ size = (known after apply)

+ type = (known after apply)

}

}

+ confidential_instance_config (known after apply)

+ network_interface {

+ internal_ipv6_prefix_length = (known after apply)

+ ipv6_access_type = (known after apply)

+ ipv6_address = (known after apply)

+ name = (known after apply)

+ network = (known after apply)

+ network_ip = (known after apply)

+ stack_type = (known after apply)

+ subnetwork = "awesome-vpc-us-west1"

+ subnetwork_project = (known after apply)

}

+ reservation_affinity (known after apply)

+ scheduling (known after apply)

}

# google_compute_network.vpc will be created

+ resource "google_compute_network" "vpc" {

+ auto_create_subnetworks = false

+ delete_default_routes_on_create = false

+ gateway_ipv4 = (known after apply)

+ id = (known after apply)

+ internal_ipv6_range = (known after apply)

+ mtu = (known after apply)

+ name = "awesome-vpc"

+ network_firewall_policy_enforcement_order = "AFTER_CLASSIC_FIREWALL"

+ numeric_id = (known after apply)

+ project = "vpt-tf-sandbox-test"

+ routing_mode = (known after apply)

+ self_link = (known after apply)

}

# google_compute_subnetwork.vpc-subnet will be created

+ resource "google_compute_subnetwork" "vpc-subnet" {

+ creation_timestamp = (known after apply)

+ external_ipv6_prefix = (known after apply)

+ fingerprint = (known after apply)

+ gateway_address = (known after apply)

+ id = (known after apply)

+ internal_ipv6_prefix = (known after apply)

+ ip_cidr_range = "10.0.0.0/16"

+ ipv6_cidr_range = (known after apply)

+ name = "awesome-vpc-us-west1"

+ network = (known after apply)

+ private_ip_google_access = (known after apply)

+ private_ipv6_google_access = (known after apply)

+ project = "vpt-tf-sandbox-test"

+ purpose = (known after apply)

+ region = "us-west1"

+ secondary_ip_range = (known after apply)

+ self_link = (known after apply)

+ stack_type = (known after apply)

}

# google_project_service.enable-compute-api will be created

+ resource "google_project_service" "enable-compute-api" {

+ disable_dependent_services = true

+ disable_on_destroy = true

+ id = (known after apply)

+ project = "vpt-tf-sandbox-test"

+ service = "compute.googleapis.com"

+ timeouts {

+ create = "2m"

+ update = "2m"

}

}

Plan: 4 to add, 0 to change, 0 to destroy.

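This looks the way we want it! Compared with dev, the test plan uses an e2-small machine in us-west1-b with a Debian 12 image, and it skips the storage bucket, which is why it adds 4 resources instead of 5.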
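Because the same configuration targets a different project depending on the selected workspace, one optional safeguard is to check the active workspace before applying. The following is a minimal sketch and not part of the downloadable source: apply_in_workspace is a hypothetical helper name, and it relies only on the terraform workspace show and terraform apply commands already used in this post.

```shell
# Hypothetical helper (not from the original source code):
# run `terraform apply` only if the active workspace matches the expected one.
apply_in_workspace() {
  expected_ws="$1"
  current_ws="$(terraform workspace show)"

  if [ "$current_ws" != "$expected_ws" ]; then
    # Report the mismatch on stderr and stop before anything is applied.
    echo "active workspace is '$current_ws', expected '$expected_ws'; aborting" >&2
    return 1
  fi

  terraform apply
}

# Usage:
# apply_in_workspace vpt-tf-sandbox-test
```

If the active workspace does not match, the helper returns a non-zero status without calling apply, so a stray apply against the wrong project is less likely.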

Cleanup

Be sure to destroy the created infrastructure so you don’t get a surprise bill!

# dev workspace resources

terraform workspace select vpt-tf-sandbox-dev

terraform destroy

# test workspace resources

terraform workspace select vpt-tf-sandbox-test

terraform destroy
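The cleanup steps above can also be wrapped in a small loop. This is a sketch that assumes your workspace names match the ones used in this post; destroy_workspaces is a hypothetical helper and not part of the downloadable source.

```shell
# Hypothetical helper (not from the original source code):
# select each named workspace in turn and destroy its resources,
# stopping at the first command that fails.
destroy_workspaces() {
  for ws in "$@"; do
    terraform workspace select "$ws" || return 1
    terraform destroy || return 1
  done
}

# Usage (terraform destroy still asks for confirmation in each workspace):
# destroy_workspaces vpt-tf-sandbox-dev vpt-tf-sandbox-test
```

Stopping at the first failure keeps a failed workspace switch from being followed by a destroy in whichever workspace was previously selected.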

Closing

There’s a lot to take away from this post, including some of the dangers of managing Terraform state across multiple projects. Terraform workspaces are a good way to use native Terraform functionality to manage separate states for dev, test, and prod environments. There are options outside of native Terraform that I’ll write about in additional posts. Thank you for sticking around until the end of this one!