Managing multiple local Git repositories can be time-consuming and error-prone (ever forget a commit?). To streamline the process, I’ve created a set of Python scripts that automate two common Git operations: checking every local repository for uncommitted changes and pulling updates from the remotes. Running them regularly saves time and reduces the risk of leaving a change uncommitted or working from a stale copy.

In this post, I’ll walk you through the scripts, explain their functionality, and provide step-by-step instructions on how to set them up and use them effectively.

These are some of my favorite helper scripts. Let’s dive in!

What You’ll Need

- Linux environment (I’m using Ubuntu)

- Local Git repos (e.g., in ~/git)

- Bash and Python 3

Source Code

Download the source code from vicsprotips on GitHub!

Let’s Get Started!

I have these scripts split into two concepts: “git pull” and “check local git for modifications”.

Let’s review the source code for each before we proceed.

check-git.py

The check-git.py script takes a target directory (e.g., ~/git) and checks every local git repo under that target for local changes. It then prints a list of the repos that have modifications. This makes it easy to spot outstanding commits across one or many local repositories.

    # https://vicsprotips.com
    # check git: search for .git folders from given root and notify you if you have changes to commit
    # usage: python3 check-git.py ~/git
    # Vic Baker

    import os, sys, subprocess

    queue = []  # repos that have something to commit

    print(f"Checking local repos under {sys.argv[1]}")

    for root, dirs, files in os.walk(sys.argv[1], topdown=False):
        for d in dirs:
            if d == '.git':
                print(f"{root}")
                # run git inside the repo via cwd so paths with spaces still work
                cmd = ['git', '-c', 'color.status=always', 'status']
                returned_output = subprocess.check_output(cmd, cwd=root).decode('utf-8')
                if 'nothing to commit' not in returned_output:
                    queue.append(root)
                    print(f'{root}\n{returned_output}')

    print('\n\nSummary:')
    for q in queue:
        print(q)

mp-git-pull.py

The mp-git-pull.py script finds every local git repo under a specified target (e.g., ~/git) and runs git pull in each. It uses Python’s multiprocessing module to run the pulls in parallel, which is significantly faster than the single-process alternative (see git-pull.py).

In summary, the script below takes a target directory as its argument (e.g., ~/git) and builds a queue of the local repos found under it. The queue of repo paths is then handed to a multiprocessing pool (here the pool size defaults to the number of CPU cores), and each worker performs a git pull for one repo at a time. This script is fantastic when you work with many repositories that are updated frequently (think CI/CD or teams of developers working across multiple repos).

    # https://vicsprotips.com
    # git pull (multi-process): search for .git folders from given root and pull origin for each
    # much faster than non-mp version
    # usage: python3 mp-git-pull.py ~/git
    # Vic Baker

    import multiprocessing
    import subprocess
    import os, sys


    def doWork(repo_dir):
        # run git pull in the given repo and return "<path>:<git output>"
        try:
            cmd = ['git', '-c', 'color.status=always', 'pull']
            returned_output = subprocess.check_output(cmd, cwd=repo_dir).decode('utf-8')
            return f"{repo_dir}:{returned_output}".strip()
        except Exception:
            return f"{repo_dir}: unable to pull -- local changes?".strip()


    if __name__ == '__main__':
        rootDir = sys.argv[1]
        queue = []

        print(f"Generating queue for {rootDir}")
        for root, dirs, files in os.walk(rootDir, topdown=True):
            if ".git" in dirs:
                queue.append(root)

        print("Queue created. Starting pool")
        # Pool() defaults to one worker per CPU; map blocks until every pull finishes
        with multiprocessing.Pool() as pool:
            results = pool.map(doWork, queue)
        print("Subprocess pools done!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")

        # anything other than "Already up to date." (including a failed pull) is reported
        updates = []
        for r in results:
            if not r.endswith("Already up to date."):
                updates.append(r.split(':', 1)[0])
                print(r)

        if len(updates) > 0:
            print("\n\nThe following repos had updates:")
            for r in updates:
                print(r)
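
One possible tweak to the queue-building loop in mp-git-pull.py: os.walk keeps descending into every repo it finds, including the .git folder itself, which can be slow for large repos. The sketch below stops descending once a .git folder is found. The find_repos name is hypothetical and not part of the original scripts, and the sketch assumes you do not keep repos nested inside other repos, because nested repos would be skipped.

```python
import os


def find_repos(root_dir):
    """Return the directories under root_dir that contain a .git folder.

    Hypothetical helper for illustration; not part of the original scripts.
    """
    repos = []
    for root, dirs, files in os.walk(root_dir, topdown=True):
        if ".git" in dirs:
            repos.append(root)
            # With topdown=True, emptying dirs in place tells os.walk
            # not to descend any further below this repository.
            dirs[:] = []
    return sorted(repos)
```

The returned list could be passed to pool.map in place of the queue built in the script above.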

Next, we’ll walk through setting them up for you to use in your Linux environment via cloning and creating aliases.

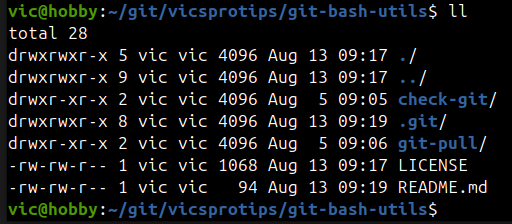

Cloning the scripts

Clone the scripts from vicsprotips GitHub and put them in your ~/git folder. I’ve put mine under ~/git/vicsprotips/git-bash-utils. It should look something like this:

Adding aliases to ~/.bashrc

Next, we need to add the following aliases to your ~/.bashrc file so that the aliases will be active every time you start a bash terminal.

    # add the following to your ~/.bashrc
    alias gp="python3 ~/git/vicsprotips/git-bash-utils/git-pull/mp-git-pull.py ~/git"
    alias cg="python3 ~/git/vicsprotips/git-bash-utils/check-git/check-git.py ~/git"

Save the changes to your ~/.bashrc file. You can then either close and reopen each terminal window or run source ~/.bashrc in each open terminal to activate the aliases there.

Note: You can call the gp or cg aliases from anywhere and they’ll check EVERY git repo under your target (~/git). They do not depend on which directory you’re in when you call them. The scripts work from the ~/git target we set in the alias, so adjust that path if your repos live somewhere else.
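
Both scripts read the target straight from sys.argv[1], so running one without an argument fails with an IndexError. If you would rather have a fallback, a minimal sketch could look like the following. The resolve_target helper and its ~/git default are assumptions made for illustration, not something the original scripts include.

```python
import os


def resolve_target(args, default="~/git"):
    """Pick the directory to scan: the first argument if given, else the default.

    Hypothetical helper; the original scripts use sys.argv[1] directly.
    """
    target = args[0] if args else default
    # Expand a leading ~ in case the shell did not (for example, when the
    # path was quoted), then turn the result into an absolute path.
    return os.path.abspath(os.path.expanduser(target))
```

Inside a script this would be called as resolve_target(sys.argv[1:]), and the aliases above would keep working unchanged.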

Using the git-bash-utils

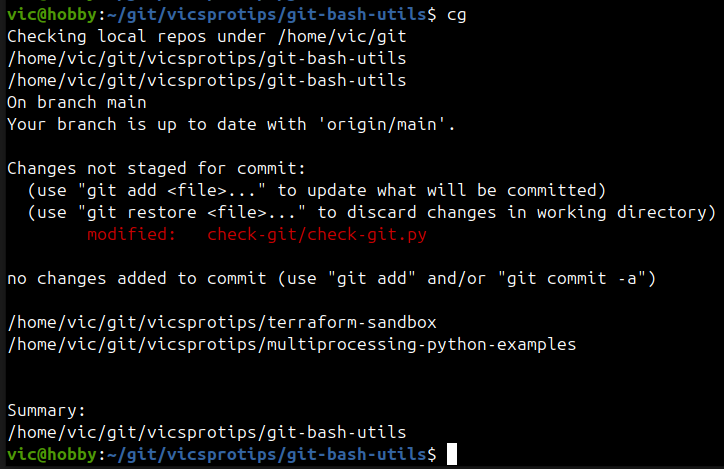

cg — ‘check git’

From your bash terminal, type cg to check your git repos for outstanding local modifications. The script outputs a summary of the repos with local changes. As shown below, the script scans the local repos under the target set in the ~/.bashrc alias and detects that I have an uncommitted change to the check-git.py script (I added a comment at the top of the file).

The summary section shows the full path of each repo with local changes. You can then handle each one through your regular git process (e.g., git commit). NOTE: The cg script only alerts you to the local changes it detects; it does not commit or modify anything.
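
check-git.py decides whether a repo has changes by looking for the text “nothing to commit” in the human-readable git status output. A possible alternative, sketched here under the assumption that git is on your PATH, is git status --porcelain, which prints one line per changed or untracked path and nothing at all for a clean repo. The function names below are hypothetical and not part of the original script.

```python
import subprocess


def has_local_changes(porcelain_output):
    """Return True if `git status --porcelain` output lists any entries."""
    return bool(porcelain_output.strip())


def repo_is_dirty(repo_dir):
    """Run git in repo_dir and report whether anything is uncommitted.

    Hypothetical helper; assumes git is installed and repo_dir is a work tree.
    """
    output = subprocess.check_output(
        ["git", "status", "--porcelain"], cwd=repo_dir
    ).decode("utf-8")
    return has_local_changes(output)
```

Like the original check, this reports modified and untracked files only; commits that exist locally but have not been pushed are not detected by either approach.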

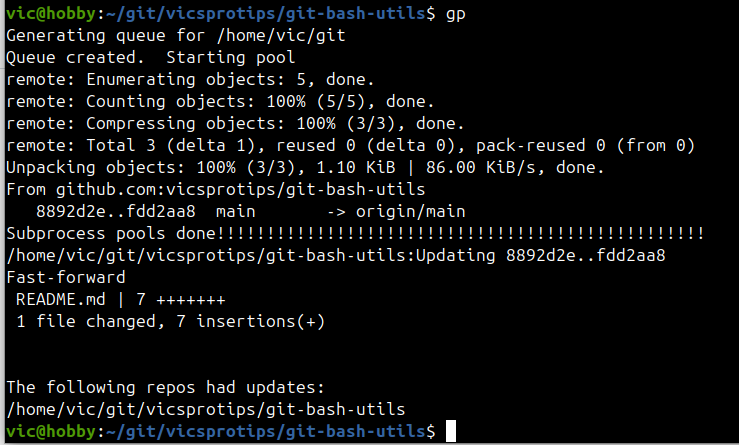

gp — ‘git pull’

Now let’s see the gp alias in action. In our alias, we set ~/git as the target, so the script runs git pull for every local repo under our ~/git folder. The output below shows that the script detected and pulled a remote change.

In Closing

We’ve walked through one approach to getting the scripts set up in your local environment. Someday I might write a convenience script, but I think it’s worthwhile for us to walk through things together. Hopefully you’ve learned something and find these scripts as useful as I do.