Python multiprocessing is an incredibly powerful tool for processing a queue of items that do not depend on one another. In this exercise I'm going to provide a baseline Python multiprocessing example that will serve as a foundation for building more complex workloads as needed. I'm also providing a slightly more advanced version, which uses tuples, for you to build upon.

Download the Example Source Code:

Download the source code here.

What you’ll need:

- A code editor (I’m using Visual Studio Code)

- A Python3 installation (on your workstation or via a VS Code Dev Container)

- Note: I’m running on Linux (Ubuntu)
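Because the platform matters for multiprocessing, here is a quick way to check how Python will launch worker processes on your machine. This is a minimal sketch; the `main()` wrapper is only for illustration. On Linux the default start method has traditionally been `fork`, while Windows and macOS default to `spawn`, and newer Python releases may change these defaults, so it is worth checking rather than assuming. With `spawn`, each worker re-imports your script, which is why the examples in this post keep the pool code under `if __name__ == '__main__':`.

```python
import multiprocessing

def main():
    # The start method decides how pool workers are created (fork, spawn, forkserver).
    method = multiprocessing.get_start_method()
    # Every start method this platform supports.
    available = multiprocessing.get_all_start_methods()
    print(f"Default start method: {method}")
    print(f"Available start methods: {available}")
    return method, available

if __name__ == '__main__':
    main()
```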

Let’s Begin Python Multiprocessing!

Let's start with a baseline multiprocessing program. The program will take a list of 10 numbers [1,2,3,4,5,6,7,8,9,10] and perform a processing task on each element of the list, independently of the other items in the list.

```python
####################################
# Multiprocessing baseline example #
# https://vicsprotips.com          #
# Vic Baker                        #
####################################

import multiprocessing

def doWork(params):
    """
    Each item from the queue will be processed here
    """
    try:
        data = params
        print(f"doWork is processing: {data}")
        return data
    except Exception as e:
        # use params here: data may not be assigned if the failure happened early
        return f"Error processing {params}: {e}"

if __name__ == '__main__':
    # define a queue with a range of numbers
    queue = list(range(1, 11))
    print(queue)
    print("Queue created. Starting pool")
    pool = multiprocessing.Pool()
    results = pool.map(doWork, queue)
    pool.close()  # no more work will be submitted
    pool.join()   # wait for the worker processes to exit
    print("Pool done! Printing results:")
    for r in results:
        print(r)
```

Let's walk through the above example. In the main section of the code we define a queue as a list of integers [1,2,3,4,5,6,7,8,9,10]. We then create a multiprocessing pool that, by default, starts one worker process per CPU core available on the machine running the code.

```python
pool = multiprocessing.Pool()   # one worker process per available core
# vs
pool = multiprocessing.Pool(2)  # exactly 2 worker processes
```

Once the pool is defined, we begin processing the elements of the queue by telling Python that we want each item in the queue to be processed by the ‘doWork’ function. In this example the queue is a list of integers; however, pool.map accepts any iterable, including more advanced structures that I'll demonstrate later.

```python
results = pool.map(doWork, queue)
```

‘results’ will be a list of the values returned by doWork, in the same order as the items in the queue. We then iterate over that list to display the output.
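A common variation is to manage the pool with a `with` block instead of calling `close()` yourself. The sketch below assumes a hypothetical `doWork` that doubles each number, standing in for real work. On exit, the `with` block calls `terminate()` on the pool, which is safe here because `pool.map` has already returned every result before the block ends.

```python
import multiprocessing
import os

def doWork(params):
    """Process a single queue item; doubling is a hypothetical stand-in for real work."""
    return params * 2

def main():
    queue = list(range(1, 11))
    # Pool() with no argument sizes itself to the CPU count; processes=2 caps it at two.
    print(f"This machine reports {os.cpu_count()} CPUs")
    # The pool is cleaned up automatically when the with-block exits.
    with multiprocessing.Pool(processes=2) as pool:
        results = pool.map(doWork, queue)
    return results

if __name__ == '__main__':
    print(main())
```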

Let’s now look at the def doWork(params) function. This function is used to process each individual element of the queue, one element per call to doWork(). In this example we’re getting the item, printing it, and returning the integer as the result of the processing.

```python
def doWork(params):
    """
    Each item from the queue will be processed here
    """
    try:
        data = params
        print(f"doWork is processing: {data}")
        return data
    except Exception as e:
        return f"Error processing {params}: {e}"
```

The first thing we do is read the params argument. Recall that ‘params’ represents a single element of the queue (in our example, each element is an integer). Python calls doWork once for every item in the queue. Later I'll provide an example of a more complex ‘params’ argument.
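The try/except in doWork matters more than it looks: if a worker raises an uncaught exception, pool.map re-raises it in the main process and you lose the other results. Returning the error as a string keeps one bad item from sinking the whole batch. Here is a minimal sketch of that behavior, assuming a hypothetical doWork that divides 100 by each item so that a 0 in the queue fails.

```python
import multiprocessing

def doWork(params):
    """Divide 100 by the queue item; a zero triggers the except branch."""
    try:
        return 100 / params  # hypothetical work that can fail
    except Exception as e:
        # Returning the error as a value keeps pool.map from raising
        # and discarding the results of the other items.
        return f"Error processing {params}: {e}"

if __name__ == '__main__':
    queue = [5, 0, 4]
    with multiprocessing.Pool(2) as pool:
        results = pool.map(doWork, queue)
    for r in results:
        print(r)
```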

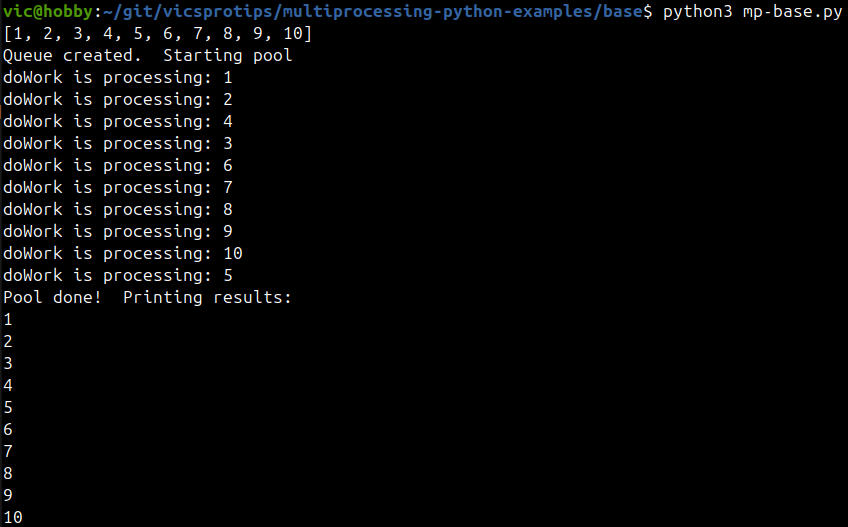

Let’s run the python multiprocessing code:

Running the code from a bash terminal outputs the following:

Here we see where we created and printed the queue contents in the main function, then the output of doWork as it processed each item, and finally the message that the pool is done, followed by the results list. Notice that the order in which doWork processes items is not guaranteed; however, the results list is in the same order as the items were sent in.
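To see the ordering guarantee directly, the sketch below compares pool.map with pool.imap_unordered, which yields each result as soon as its worker finishes. The sleep is a hypothetical delay chosen so that larger items finish sooner; the exact order imap_unordered produces depends on scheduling, so only the map output should be relied on.

```python
import multiprocessing
import time

def doWork(params):
    # Hypothetical delay: larger items sleep less, so they tend to finish first.
    time.sleep((5 - params) * 0.1)
    return params

def main():
    queue = [1, 2, 3, 4]
    with multiprocessing.Pool(4) as pool:
        # map: results come back in queue order, whatever order workers finish in.
        ordered = pool.map(doWork, queue)
        # imap_unordered: results arrive in completion order.
        unordered = list(pool.imap_unordered(doWork, queue))
    return ordered, unordered

if __name__ == '__main__':
    ordered, unordered = main()
    print("map:           ", ordered)
    print("imap_unordered:", unordered)
```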

Processing a Queue of Tuples for Python Multiprocessing:

Let's look at a slightly more advanced Python multiprocessing example that uses a queue of tuples, each pairing a number with a letter. The complete code is below; then we'll talk through the differences and the output.

```python
#############################################
# Multiprocessing tuples example            #
# https://vicsprotips.com                   #
# Vic Baker                                 #
#############################################

import multiprocessing

def doWork(params):
    """
    Each item from the queue will be processed here
    """
    try:
        number = params[0]
        letter = params[1]
        print(f"doWork is processing: {params}, number = {number}, letter = {letter}")
        return f"{number}-{letter}"
    except Exception as e:
        return f"Error processing {params}: {e}"

if __name__ == '__main__':
    # define a list of tuples
    numbers = [1, 2, 3, 4, 5]
    letters = ['a', 'b', 'c', 'd', 'e']
    queue = list(zip(numbers, letters))
    print(queue)
    print("Queue created. Starting pool")
    pool = multiprocessing.Pool(3)  # number of worker processes; omit it to use all available cores
    results = pool.map(doWork, queue)
    pool.close()  # no more work will be submitted
    pool.join()   # wait for the worker processes to exit
    print("Pool done! Printing results:")
    for r in results:
        print(r)
```

In main we define a list of tuples, each consisting of an integer and a character, and we specify how many worker processes the pool should use. In doWork we unpack the params tuple to get the integer and character that make up each queue element. Finally, we perform some simple processing on the input and return a value based on it.
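If you would rather not index into params, Pool.starmap unpacks each tuple into separate arguments for you. This is an alternative to the approach above, not what the original example uses; the two-argument doWork below is a hypothetical rewrite of the same logic.

```python
import multiprocessing

def doWork(number, letter):
    """starmap unpacks each (number, letter) tuple into these two arguments."""
    return f"{number}-{letter}"

if __name__ == '__main__':
    numbers = [1, 2, 3, 4, 5]
    letters = ['a', 'b', 'c', 'd', 'e']
    queue = list(zip(numbers, letters))
    with multiprocessing.Pool(3) as pool:
        # Equivalent to calling doWork(*item) for every item in the queue.
        results = pool.starmap(doWork, queue)
    print(results)  # ['1-a', '2-b', '3-c', '4-d', '5-e']
```

Like map, starmap returns results in input order.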

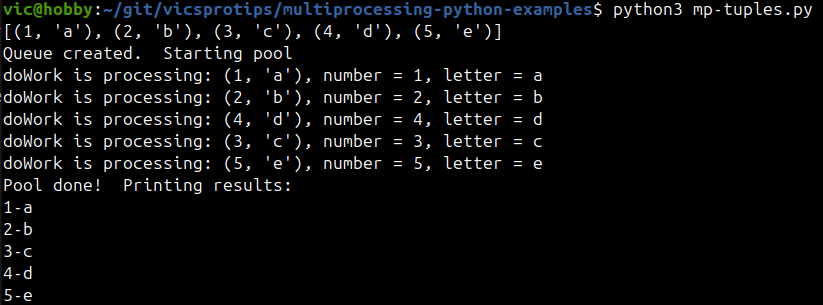

Let’s run the code and look at the output:

As in the prior example, we create and print the contents of the queue, except this time it is a list of tuples. Next, doWork is called once per tuple, and the printed lines again show the pool processing items out of order. Finally, we print the aggregated results returned from the pool.map call, which are back in input order.
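If you want to see which worker handled which item, a small variation can return the process ID and name alongside each result. This is a minimal sketch for exploration, not part of the original example; the chunksize=1 setting hands out one tuple at a time so the work can spread across all three workers.

```python
import multiprocessing
import os

def doWork(params):
    """Return the processed item plus the PID and name of the worker that handled it."""
    number, letter = params
    worker = multiprocessing.current_process().name
    return f"{number}-{letter}", os.getpid(), worker

if __name__ == '__main__':
    queue = list(zip([1, 2, 3, 4, 5], ['a', 'b', 'c', 'd', 'e']))
    with multiprocessing.Pool(3) as pool:
        # chunksize=1 sends one tuple per task instead of batching them.
        results = pool.map(doWork, queue, chunksize=1)
    for value, pid, worker in results:
        print(f"{value} handled by {worker} (pid {pid})")
```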

In Closing:

We've covered some basic examples of multiprocessing with Python that give you a foundation on which to build more complex workloads: for example, maybe you have a list of URLs that need to be parsed, need to get file listings from many directories, or want to calculate pi programmatically. Whatever your use case, hopefully these examples help you out!
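As a starting point for the URL use case, the same pool.map pattern applies unchanged. This sketch only splits each URL into host and path with the standard library's urlparse; the URLs are hypothetical placeholders, and work this cheap will not actually benefit from multiple processes, so swap in your heavier per-URL task.

```python
import multiprocessing
from urllib.parse import urlparse

def doWork(url):
    """Split one URL into (host, path); a stand-in for heavier per-URL work."""
    parts = urlparse(url)
    return parts.netloc, parts.path

if __name__ == '__main__':
    # Hypothetical URLs for illustration only.
    queue = [
        "https://example.com/a/b",
        "https://example.org/index.html",
        "http://example.net/",
    ]
    with multiprocessing.Pool() as pool:
        results = pool.map(doWork, queue)
    for host, path in results:
        print(host, path)
```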